Transaction-Processing Framework

Typically, businesses find it necessary to develop custom B2B transaction-processing configurations for their trading partners. Developing these custom configurations increases the cost, effort, and time required to onboard each new trading partner, which limits the business relationships your company can pursue. The following sections explain how the PortX Transaction-Processing Framework dynamically processes B2B transactions across many common formats and protocols.

Transaction-Processing Solution

PortX includes a transaction-processing solution that serves as a routing and translation layer between your internal applications and your trading partners. It supports the following common formats, among others:

- X12
- EDIFACT
- XML
- CSV
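A layer that accepts several formats generally needs to recognize which one an inbound payload uses before it can route it. The following Python sketch does this from the payload's leading characters, relying on two facts: X12 interchanges begin with an `ISA` segment, and EDIFACT interchanges begin with an optional `UNA` segment followed by `UNB`. The `detect_format` function is hypothetical and not part of PortX; a production classifier would also weigh partner configuration and transport metadata.

```python
# Sketch only: detect_format is a hypothetical helper, not a PortX API.
def detect_format(payload: str) -> str:
    """Guess the format of an inbound payload from its leading characters."""
    text = payload.lstrip()
    if text.startswith("ISA"):
        # X12 interchanges open with the fixed-length ISA envelope segment.
        return "X12"
    if text.startswith(("UNA", "UNB")):
        # EDIFACT opens with an optional UNA service string advice, then UNB.
        return "EDIFACT"
    if text.startswith("<"):
        return "XML"
    first_line = text.splitlines()[0] if text else ""
    if "," in first_line:
        # Weakest heuristic: treat a comma-separated first line as CSV.
        return "CSV"
    return "UNKNOWN"
```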

The transaction-processing solution also supports the following common transport protocols, among others:

- AS2
- FTP/S

Routing Engine

A key feature of the Transaction-Processing Framework is the PortX Routing Engine.

The Routing Engine processes a wide range of transmissions from different partners, dynamically applying rules and configuration data stored in PortX.

The Routing Engine allows non-programmers in your organization, such as data analysts, to use PortX to onboard new trading partners and support their transaction configurations without having to develop, test, or deploy custom components. For more detailed information about how PortX works, see Actors, Relationships and Artifacts.
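The idea behind the Routing Engine, that onboarding a partner is a configuration change rather than a code change, can be illustrated with a minimal Python sketch. It assumes an in-memory table keyed by partner ID and document type; `PartnerConfig`, `register_partner`, `route`, and the sample values are hypothetical and do not describe how PortX stores its configuration data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartnerConfig:
    """Processing details looked up per partner and document type (hypothetical)."""
    map_name: str
    schema_name: str
    endpoint: str


# Hypothetical in-memory stand-in for configuration data held in PortX.
ROUTING_TABLE: dict[tuple[str, str], PartnerConfig] = {}


def register_partner(partner_id: str, doc_type: str, config: PartnerConfig) -> None:
    """Onboarding adds a data entry; the routing code itself is unchanged."""
    ROUTING_TABLE[(partner_id, doc_type)] = config


def route(partner_id: str, doc_type: str) -> PartnerConfig:
    """Return the configuration that drives processing for one transmission."""
    try:
        return ROUTING_TABLE[(partner_id, doc_type)]
    except KeyError:
        raise LookupError(f"no configuration for {partner_id}/{doc_type}") from None
```

Onboarding another partner is one more `register_partner` call with new data; the `route` function does not change.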

Functional Architecture

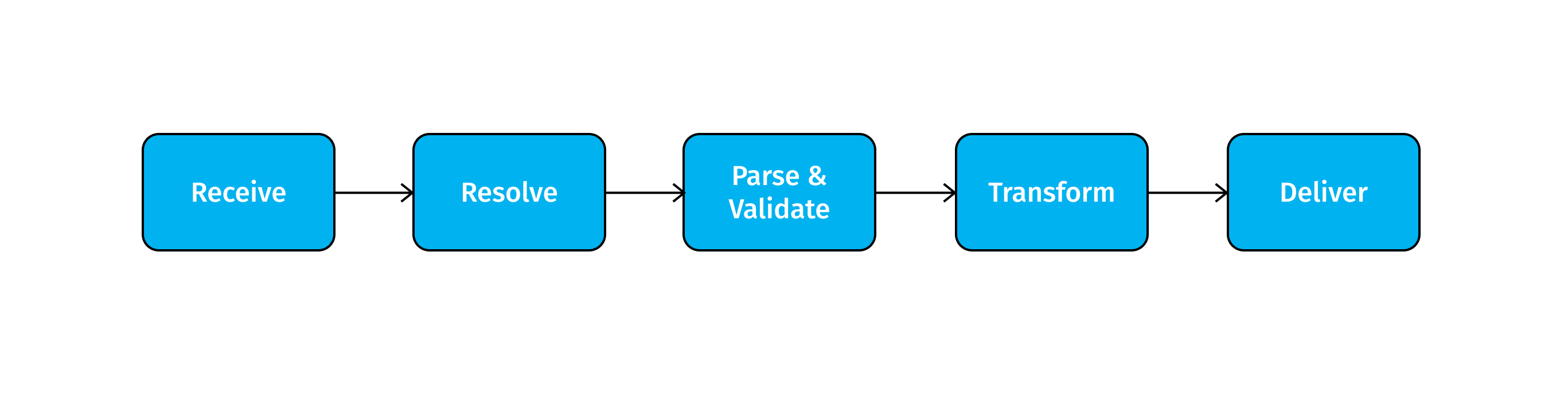

B2B transaction processing proceeds through a combination of the stages shown in Functional Architecture.

Note: Processing stages and their sequence may vary from implementation to implementation.

Receive

Each incoming transaction from a partner or internal system is delivered through a transport protocol Endpoint that is configured to receive the message.

Resolve

This stage identifies the partner and the transaction type using data inside the document or in the incoming metadata (such as HTTP headers or filenames), then retrieves the corresponding processing details from PortX. These details include the Map, Schema, and target Endpoint used by the later stages.
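For X12 traffic, the data used to identify the partner and transaction type usually sits in the interchange envelope: ISA06 and ISA08 carry the sender and receiver IDs, and ST01 carries the transaction set identifier (for example, `850` for a purchase order). The Python sketch below reads those fields. The `identify_x12` helper is hypothetical, assumes a single well-formed interchange, and ignores the other metadata sources (HTTP headers, filenames) this stage may also use.

```python
# Sketch only: identify_x12 is hypothetical and handles one interchange.
def identify_x12(payload: str) -> dict[str, str]:
    """Read the sender, receiver, and transaction set ID from an X12 interchange."""
    # The ISA segment is fixed-length: the 4th character is the element
    # separator and the 106th character is the segment terminator.
    if not payload.startswith("ISA") or len(payload) < 106:
        raise ValueError("payload does not start with an X12 ISA segment")
    element_sep = payload[3]
    segment_term = payload[105]
    segments = [s.strip() for s in payload.split(segment_term) if s.strip()]
    isa = segments[0].split(element_sep)
    st_segment = next((s for s in segments if s.startswith("ST" + element_sep)), None)
    if st_segment is None:
        raise ValueError("no ST segment found")
    st = st_segment.split(element_sep)
    return {
        "sender_id": isa[6].strip(),     # ISA06: interchange sender ID
        "receiver_id": isa[8].strip(),   # ISA08: interchange receiver ID
        "transaction_set": st[1],        # ST01: e.g. "850" for a purchase order
    }
```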

Transform

This stage translates each message into the appropriate format for the target service being invoked. This translation is performed dynamically by retrieving a Map from the Trading Partner Management (TPM) API.

Parse and Validate

This stage parses the incoming data to confirm that it is in the correct format, then validates it using a Schema retrieved from the TPM API.

Deliver

This stage uses Endpoint configuration data retrieved from the TPM API to deliver the message to an Endpoint address.

Technical Architecture

The following Conceptual View of Transaction Processing Framework shows the primary components and how these components interact to process B2B transactions.

Components

PortX

PortX is the B2B transaction-processing user interface, enabling you to:

Trading Partner Management (TPM) API

The Trading Partner Management (TPM) API manages the storage and retrieval of configuration data for your trading partners, including the details for processing transactions.

Tracking API

The Tracking API manages the storage and retrieval of metadata from your processed transactions.

For example:

- Sender
- Receiver
- Time stamps
- Message type
- Acknowledgement status

The Tracking API's capabilities include, but are not limited to, correlation logic for matching acknowledgements to original messages and for identifying duplicate messages.
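The duplicate detection and acknowledgement correlation described above can be modeled in a few lines. This Python sketch assumes that a message is identified by its sender, receiver, and control number, and that an acknowledgement carries the original control number back with the sender and receiver reversed. `TrackingRecord`, `Tracker`, and the status strings are hypothetical and are not the Tracking API's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class TrackingRecord:
    """Hypothetical subset of the metadata kept for one processed message."""
    sender: str
    receiver: str
    message_type: str
    control_number: str
    ack_status: str = "PENDING"
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class Tracker:
    """In-memory sketch of duplicate detection and acknowledgement correlation."""

    def __init__(self) -> None:
        self._records: dict[tuple[str, str, str], TrackingRecord] = {}

    def track(self, record: TrackingRecord) -> bool:
        """Store a record; return False if the same message was already seen."""
        key = (record.sender, record.receiver, record.control_number)
        if key in self._records:
            return False  # duplicate: same sender, receiver, and control number
        self._records[key] = record
        return True

    def correlate_ack(self, ack_sender: str, ack_receiver: str,
                      control_number: str, status: str) -> Optional[TrackingRecord]:
        """Attach an acknowledgement's status to the message it acknowledges."""
        # The acknowledgement flows back to the original sender, so the
        # original message has the sender and receiver roles reversed.
        original = self._records.get((ack_receiver, ack_sender, control_number))
        if original is not None:
            original.ack_status = status
        return original
```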

Transaction-Processing Stages

PortX provides a routing engine designed to meet the needs of most customer scenarios, and it can be extended where needed.

Note: At this time, the flows identified below must be developed by the customer. PortX may provide these components as part of the product in the future; however, to provide full extensibility and customization, customers will retain the option to supply their own implementations.

Receive Stages

Each receive endpoint corresponds to a component that consists of the appropriate protocol connector and the appropriate endpoint configuration. After receiving a message over a particular protocol, each receive flow:

- Tracks the message using the PortX Connector in order to persist a copy of the message as it was received from the partner.
- Places a message on the Resolve queue, with its headers populated with any important metadata from the inbound protocol, such as transport headers and filenames.
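The two receive-flow steps above can be sketched in Python, with the standard-library `queue.Queue` standing in for the Resolve queue and a plain callback standing in for the PortX Connector. The `receive` function, the `transport.` header prefix, and the sample metadata are assumptions made for illustration.

```python
import queue
from typing import Callable

# Hypothetical stand-in for the Resolve queue: items are (headers, payload) pairs.
resolve_queue: queue.Queue = queue.Queue()


def receive(payload: bytes, transport_meta: dict[str, str],
            track: Callable[[str, bytes], None]) -> None:
    """Handle one inbound message as the receive-flow steps describe."""
    # Step 1: persist a copy of the message exactly as it arrived
    # (track is a stand-in for the PortX Connector).
    track("RECEIVED", payload)
    # Step 2: carry inbound protocol metadata forward as message headers
    # and hand the message to the Resolve stage.
    headers = {f"transport.{name}": value for name, value in transport_meta.items()}
    resolve_queue.put((headers, payload))
```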

Receive flows are activated dynamically by a Receive Endpoint listener flow, which polls the TPM system for the list of endpoints that should be active. For each endpoint, the listener creates a specific receive flow from a template for the required transport protocol, then dynamically instantiates that flow in the ESB and starts it, so that the required connector endpoint is active and listening for messages.
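The listener's polling behavior amounts to reconciling the endpoints TPM reports as active against the flows already running. In this Python sketch, the TPM query, flow instantiation, and flow shutdown are injected as callables; `ReceiveEndpointListener` and its parameters are hypothetical. It also assumes, beyond what the text above states, that a flow whose endpoint disappears from the TPM list should be stopped.

```python
from typing import Callable, Mapping


class ReceiveEndpointListener:
    """Hypothetical listener keeping running receive flows in line with TPM."""

    def __init__(self,
                 fetch_active: Callable[[], Mapping[str, str]],
                 start_flow: Callable[[str, str], None],
                 stop_flow: Callable[[str], None]) -> None:
        self._fetch_active = fetch_active   # endpoint id -> transport protocol
        self._start_flow = start_flow       # builds a flow from the protocol template
        self._stop_flow = stop_flow         # assumption: removed endpoints are stopped
        self.running: set[str] = set()

    def poll_once(self) -> None:
        """One polling cycle: start newly listed endpoints, stop delisted ones."""
        desired = dict(self._fetch_active())
        wanted = set(desired)
        for endpoint_id in sorted(wanted - self.running):
            self._start_flow(endpoint_id, desired[endpoint_id])
        for endpoint_id in sorted(self.running - wanted):
            self._stop_flow(endpoint_id)
        self.running = wanted
```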

Resolve Stage

- Extracts, from the message and any transport headers, the metadata fields needed to identify the specific document type.
- Passes the metadata fields to the TPM service to look up the document type and its associated configuration settings (Map, Schema, target Endpoint), and adds this information to the context headers that travel with the message for use by later stages.
- Passes the message to the next processing stage.
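The three Resolve steps can be sketched as a single Python function that returns enriched context headers for the next stage. The identification rules are assumptions chosen for illustration: the sender comes from the `AS2-From` transport header and the document type from the XML root element. The `tpm_lookup` callable stands in for the TPM service, and the `context.*` header names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Mapping, Optional

# Hypothetical TPM lookup: (sender, document type) -> configuration, or None.
TpmLookup = Callable[[str, str], Optional[Mapping[str, str]]]


def resolve(headers: Mapping[str, str], payload: str,
            tpm_lookup: TpmLookup) -> dict[str, str]:
    """Identify the document and return headers enriched with its configuration."""
    # Step 1: gather identifying metadata. Illustrative conventions only:
    # sender from the AS2-From transport header, type from the XML root element.
    sender = headers.get("AS2-From", "")
    doc_type = ET.fromstring(payload).tag
    # Step 2: look up the document type's configuration settings.
    config = tpm_lookup(sender, doc_type)
    if config is None:
        raise LookupError(f"no configuration for sender {sender!r}, type {doc_type!r}")
    # Step 3: add the settings to the context headers for later stages.
    context = dict(headers)
    context["context.doc_type"] = doc_type
    for setting in ("map", "schema", "endpoint"):
        context[f"context.{setting}"] = config[setting]
    return context
```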

Transform Stage

- Dynamically applies the mapping script configured in the context headers to translate the message into the canonical format for the target Business Service.
- Performs any necessary data translation, such as resolving partner values to your company’s values using functions and flows.
- Uses the PortX Connector to track the mapped, canonical version of the message.
- Passes the updated message body to the next processing stage.
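A rough Python sketch of the Transform stage follows, with a registry of Python functions standing in for PortX mapping scripts and a small dictionary standing in for a partner-to-company value cross-reference. `MAPS`, `UOM_XREF`, `acme_po_to_canonical`, the `context.map` header, and the field names are all hypothetical.

```python
from typing import Any, Callable

# Hypothetical cross-reference: partner unit-of-measure code -> your code.
UOM_XREF = {"EA": "EACH", "CS": "CASE"}


def acme_po_to_canonical(src: dict[str, str]) -> dict[str, Any]:
    """Hypothetical map from one partner's flat fields to a canonical order."""
    return {
        "orderId": src["PO_NUM"],
        "quantity": int(src["QTY"]),
        # Resolve the partner's value to your company's value.
        "unit": UOM_XREF.get(src["UOM"], src["UOM"]),
    }


# Registry standing in for mapping scripts, keyed by the name in the headers.
MAPS: dict[str, Callable[[dict[str, str]], dict[str, Any]]] = {
    "acme_po_to_canonical": acme_po_to_canonical,
}


def transform(headers: dict[str, str], message: dict[str, str],
              track: Callable[[str, dict[str, Any]], None]) -> dict[str, Any]:
    """Apply the map named in the context headers, then track the result."""
    map_fn = MAPS[headers["context.map"]]
    canonical = map_fn(message)
    track("MAPPED", canonical)  # stand-in for tracking via the PortX Connector
    return canonical
```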

Validate Stage

- Dynamically applies the configured schema script to validate that the message is in the required format.
- Uses the PortX Connector to track the validation result for the message.
- Passes the message to the next processing stage.
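The Validate stage can be sketched the same way. A real deployment would more likely validate against an XSD, JSON Schema, or EDI implementation guide; this Python sketch swaps in a minimal schema that maps each required field to an expected Python type, so that the lookup, validate, and track sequence stays visible. `SCHEMAS`, `validate`, the `context.schema` header, and the status strings are hypothetical.

```python
from typing import Any, Callable

# Hypothetical schema format: required field name -> expected Python type.
SCHEMAS: dict[str, dict[str, type]] = {
    "canonical_po": {"orderId": str, "quantity": int, "unit": str},
}


def validate(headers: dict[str, str], message: dict[str, Any],
             track: Callable[[str, list[str]], None]) -> list[str]:
    """Check the message against the schema named in the context headers."""
    schema = SCHEMAS[headers["context.schema"]]
    errors: list[str] = []
    for field_name, expected in schema.items():
        if field_name not in message:
            errors.append(f"missing field: {field_name}")
        elif not isinstance(message[field_name], expected):
            errors.append(f"{field_name}: expected {expected.__name__}")
    # Stand-in for tracking the validation result via the PortX Connector.
    track("VALIDATED" if not errors else "INVALID", errors)
    return errors
```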

Deliver Stage

- Invokes the target service by passing the transformed message to the configured transport endpoint.
- Uses the PortX Connector to track the result from the target service.
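A minimal Python sketch of the Deliver stage picks a sender by the URL scheme of the configured endpoint, sends the transformed message, and tracks the returned status. Only an HTTP(S) sender built on the standard-library `urllib.request` is included; senders for other transports would be registered the same way. `deliver`, `http_post`, `DEFAULT_SENDERS`, and the `context.endpoint` header are hypothetical, and JSON is assumed as the wire format.

```python
import json
import urllib.request
from typing import Any, Callable, Mapping
from urllib.parse import urlparse

Sender = Callable[[str, bytes], int]


def http_post(address: str, body: bytes) -> int:
    """Send the body with an HTTP POST and return the status code."""
    request = urllib.request.Request(address, data=body, method="POST",
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status


# Hypothetical registry of senders keyed by endpoint URL scheme.
DEFAULT_SENDERS: dict[str, Sender] = {"http": http_post, "https": http_post}


def deliver(headers: Mapping[str, str], message: dict[str, Any],
            track: Callable[[str, int], None],
            senders: Mapping[str, Sender] = DEFAULT_SENDERS) -> int:
    """Send the transformed message to the configured endpoint, then track it."""
    address = headers["context.endpoint"]
    scheme = urlparse(address).scheme
    if scheme not in senders:
        raise ValueError(f"no sender configured for scheme {scheme!r}")
    status = senders[scheme](address, json.dumps(message).encode("utf-8"))
    track("DELIVERED", status)  # stand-in for tracking via the PortX Connector
    return status
```

Passing `senders` explicitly also makes the stage testable without a live endpoint.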

Message Payload Persistence Stage

This optional flow can be implemented to store message payloads at various stages. It receives a message from the PortX Connector, persists the message payload to the desired data store, and returns a URL that can later be used to retrieve the payload through the Message Payload Retrieval API. The URL is saved with the related tracking data in the Tracking API and displayed to the user in PortX in the context of the transaction. Clicking the link invokes the Message Payload Retrieval API and displays the message payload in a pop-up window.

Message Payload Retrieval API

The Message Payload Retrieval API retrieves a message payload using a URL that contains the transactionId of the message to be retrieved.
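The persistence and retrieval pair can be sketched in Python with an in-memory store. The sketch assumes a URL layout of `/payloads/{transactionId}/{stage}` so that each stage's payload gets its own URL; the layout, the `PayloadStore` class, and the base URL are hypothetical, and a real implementation would write to the data store of your choice.

```python
from urllib.parse import quote, unquote, urlparse


class PayloadStore:
    """Hypothetical in-memory stand-in for the payload data store."""

    def __init__(self, base_url: str) -> None:
        self._base_url = base_url.rstrip("/")
        self._payloads: dict[tuple[str, str], bytes] = {}

    def persist(self, transaction_id: str, stage: str, payload: bytes) -> str:
        """Store one stage's payload; return a URL containing the transactionId."""
        self._payloads[(transaction_id, stage)] = payload
        return (f"{self._base_url}/payloads/"
                f"{quote(transaction_id, safe='')}/{quote(stage, safe='')}")

    def retrieve(self, url: str) -> bytes:
        """Look up the payload identified by a URL returned from persist()."""
        *_, transaction_id, stage = urlparse(url).path.split("/")
        return self._payloads[(unquote(transaction_id), unquote(stage))]
```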

Business Service APIs

For each target internal service, there is typically a component that exposes a REST-based API and communicates with the backend system using the appropriate connector or connectors. These Business Service APIs are not technically part of the B2B system, but are often part of the overall solution.

Replay Stage

The replay flow coordinates the replay of transactions. It polls the Tracking service for transactions that have been marked for replay. When it finds transactions that need to be replayed, it:

- Pulls the original message body and headers from the Tracking API and the Message Payload Retrieval API.
- Constructs a new message with the original payload and headers and passes it to the Resolve flow to reprocess the transaction.
- Tracks the fact that the transaction has been replayed.
- Updates the TPM service to indicate that the replay is complete.
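One polling pass of the replay flow can be sketched as a Python function whose dependencies (the Tracking query, payload retrieval, the Resolve flow, tracking, and the TPM update) are passed in as callables. `replay_pending` and the `transactionId`, `headers`, and `payloadUrl` record fields are hypothetical names used only for this illustration.

```python
from typing import Any, Callable, Iterable, Mapping


def replay_pending(
    find_marked: Callable[[], Iterable[Mapping[str, Any]]],
    fetch_payload: Callable[[str], bytes],
    send_to_resolve: Callable[[dict[str, str], bytes], None],
    track_replay: Callable[[str], None],
    mark_complete: Callable[[str], None],
) -> int:
    """Run one polling pass of a replay flow; return the number replayed."""
    replayed = 0
    for txn in find_marked():
        txn_id = txn["transactionId"]
        # Step 1: original headers from tracking data, body via its payload URL.
        headers = dict(txn["headers"])
        payload = fetch_payload(txn["payloadUrl"])
        # Step 2: rebuild the message and hand it back to the Resolve flow.
        send_to_resolve(headers, payload)
        # Step 3: record the replay; step 4: report completion to TPM.
        track_replay(txn_id)
        mark_complete(txn_id)
        replayed += 1
    return replayed
```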